In our pilot study, we draped a thin, flexible electrode array over the surface of the volunteer’s brain. The electrodes recorded neural signals and sent them to a speech decoder, which translated the signals into the words the man intended to say. It was the first time a paralyzed person who couldn’t speak had used neurotechnology to broadcast whole words, not just letters, from the brain.

That trial was the culmination of more than a decade of research on the underlying brain mechanisms that govern speech, and we’re enormously proud of what we’ve accomplished so far. But we’re just getting started.

My lab at UCSF is working with colleagues around the world to make this technology safe, stable, and reliable enough for everyday use at home. We’re also working to improve the system’s performance so it will be worth the effort.

How neuroprosthetics work

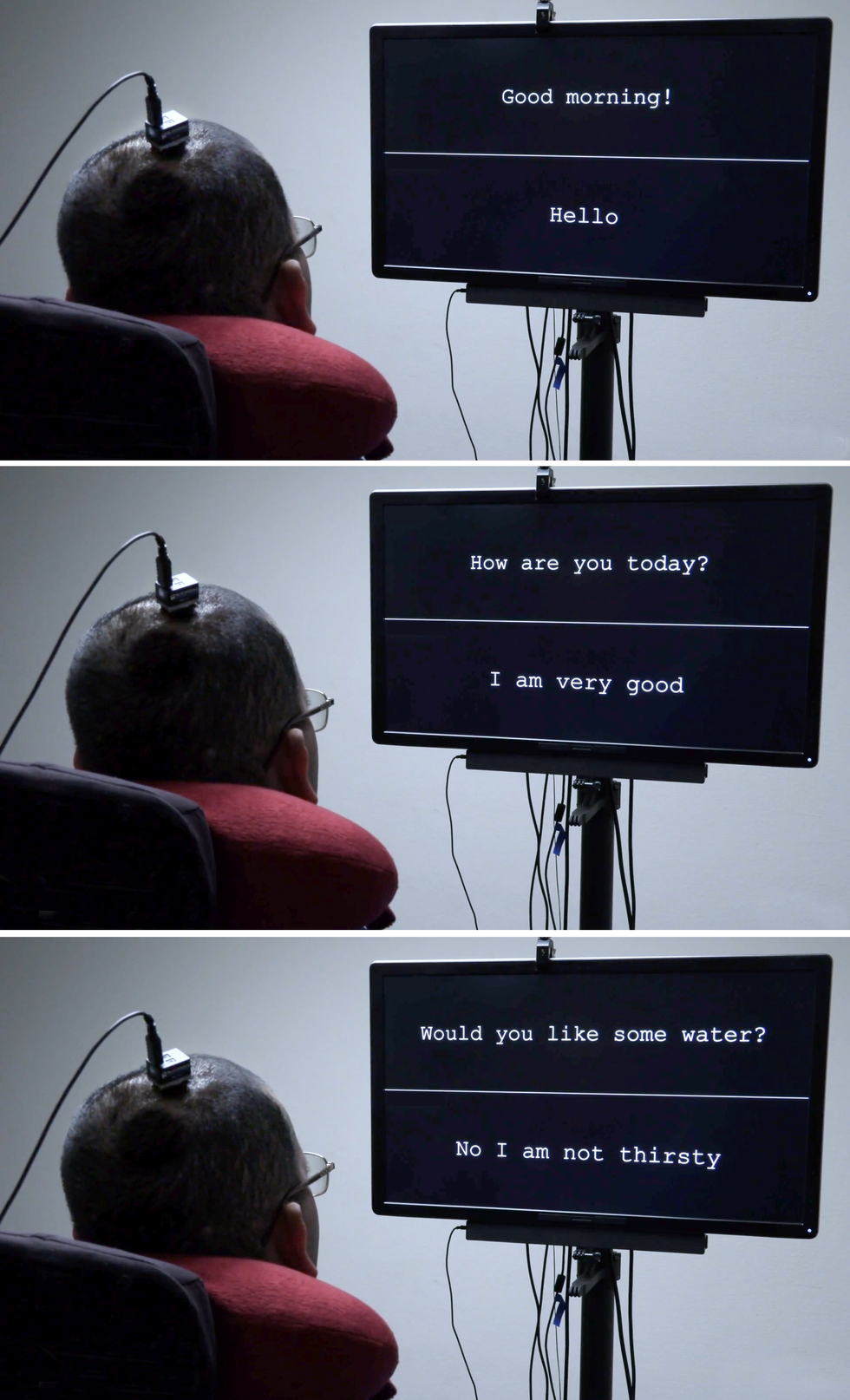

The first version of the brain-computer interface gave the volunteer a vocabulary of 50 practical words. University of California, San Francisco

Neuroprosthetics have come a long way in the past two decades. Prosthetic implants for hearing have advanced the furthest, with designs that interface with the cochlear nerve of the inner ear or directly with the auditory brain stem. There is also considerable research on retinal and brain implants for vision, as well as efforts to give people with prosthetic hands a sense of touch. All of these sensory prosthetics take information from the outside world and convert it into electrical signals that feed into the brain’s processing centers.

The opposite kind of neuroprosthetic records the electrical activity of the brain and converts it into signals that control something in the outside world, such as a robotic arm, a video-game controller, or a cursor on a computer screen. That last control modality has been used by groups such as the BrainGate consortium to enable paralyzed people to type words, sometimes one letter at a time and sometimes using an autocomplete function to speed up the process.

For that typing-by-brain function, an implant is typically placed in the motor cortex, the part of the brain that controls movement. The user then imagines certain physical actions to control a cursor that moves over a virtual keyboard. Another approach, pioneered by some of my collaborators in a 2021 paper, had one user imagine that he was holding a pen to paper and writing letters, creating signals in the motor cortex that were translated into text. That approach set a new record for speed, enabling the volunteer to write about 18 words per minute.

In my lab’s research, we’ve taken a more ambitious approach. Instead of decoding a user’s intent to move a cursor or a pen, we decode the intent to control the vocal tract, comprising dozens of muscles governing the larynx (commonly called the voice box), the tongue, and the lips.

The seemingly simple conversational setup for the paralyzed man [in pink shirt] is enabled by both sophisticated neurotech hardware and machine-learning systems that decode his brain signals. University of California, San Francisco

I began working in this area more than 10 years ago. As a neurosurgeon, I would often see patients with severe injuries that left them unable to speak. To my surprise, in many cases the locations of brain injuries didn’t match up with the syndromes I learned about in medical school, and I realized that we still have a lot to learn about how language is processed in the brain. I decided to study the underlying neurobiology of language and, if possible, to develop a brain-machine interface (BMI) to restore communication for people who have lost it. In addition to my neurosurgical background, my team has expertise in linguistics, electrical engineering, computer science, bioengineering, and medicine. Our ongoing clinical trial is testing both hardware and software to explore the limits of our BMI and determine what kind of speech we can restore to people.

The muscles involved in speech

Speech is one of the behaviors that sets humans apart. Plenty of other species vocalize, but only humans combine a set of sounds in myriad different ways to represent the world around them. It is also an extraordinarily complicated motor act; some experts believe it is the most complex motor action that people perform. Speaking is a product of modulated airflow through the vocal tract: with every utterance we shape the breath by creating audible vibrations in our laryngeal vocal folds and changing the shape of the lips, jaw, and tongue.

Many of the muscles of the vocal tract are quite unlike the joint-based muscles, such as those in the arms and legs, which can move in only a few prescribed ways. For example, the muscle that controls the lips is a sphincter, while the muscles that make up the tongue are governed more by hydraulics: the tongue is largely composed of a fixed volume of muscular tissue, so moving one part of the tongue changes its shape elsewhere. The physics governing the movements of such muscles is totally different from that of the biceps or hamstrings.

Because there are so many muscles involved and they each have so many degrees of freedom, there is essentially an infinite number of possible configurations. But when people speak, it turns out they use a relatively small set of core movements (which differ somewhat in different languages). For example, when English speakers make the “d” sound, they put their tongues behind their teeth; when they make the “k” sound, the backs of their tongues go up to touch the ceiling of the back of the mouth. Few people are conscious of the precise, complex, and coordinated muscle actions required to say the simplest word.

Team member David Moses looks at a readout of the patient’s brain waves [left screen] and a display of the decoding system’s activity [right screen]. University of California, San Francisco

My research group focuses on the parts of the brain’s motor cortex that send movement commands to the muscles of the face, throat, mouth, and tongue. Those brain regions are multitaskers: They manage the muscle movements that produce speech and also the movements of those same muscles for swallowing, smiling, and kissing.

Studying the neural activity of those regions in a useful way requires both spatial resolution on the scale of millimeters and temporal resolution on the scale of milliseconds. Historically, noninvasive imaging systems have been able to provide one or the other, but not both. When we started this research, we found remarkably little data on how brain activity patterns were associated with even the simplest components of speech: phonemes and syllables.

Here we owe a debt of gratitude to our volunteers. At the UCSF epilepsy center, patients preparing for surgery typically have electrodes surgically placed over the surfaces of their brains for several days so we can map the regions involved when they have seizures. During those few days of wired-up downtime, many patients volunteer for neurological research experiments that make use of the electrode recordings from their brains. My group asked patients to let us study their patterns of neural activity while they spoke words.

The hardware involved is called electrocorticography (ECoG). The electrodes in an ECoG system don’t penetrate the brain but lie on its surface. Our arrays can contain several hundred electrode sensors, each of which records from thousands of neurons. So far, we’ve used an array with 256 channels. Our goal in those early studies was to discover the patterns of cortical activity when people speak simple syllables. We asked volunteers to say specific sounds and words while we recorded their neural patterns and tracked the movements of their tongues and mouths. Sometimes we did so by having them wear colored face paint and using a computer-vision system to extract the kinematic gestures; other times we used an ultrasound machine positioned under the patients’ jaws to image their moving tongues.
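To make the millisecond-scale requirement concrete, here is a minimal Python sketch that summarizes a multichannel recording as average signal power per channel in consecutive short windows. It is an illustration under stated assumptions, not the processing used in these studies: the 1,000-Hz sampling rate, the 50-ms window, and the function name `window_power` are hypothetical; only the 256-channel count comes from the text.

```python
# Illustrative assumptions: one sample per millisecond; 256 channels as in the array.
SAMPLE_RATE_HZ = 1000
N_CHANNELS = 256


def window_power(recording, window_ms=50):
    """Return mean squared amplitude per channel for consecutive windows.

    `recording` is a list of time samples, each a list of N_CHANNELS voltages.
    The result is a list of windows; each window is a list of N_CHANNELS
    power values. Samples left over after the last full window are dropped.
    """
    step = window_ms * SAMPLE_RATE_HZ // 1000  # samples per window
    windows = []
    for start in range(0, len(recording) - step + 1, step):
        chunk = recording[start:start + step]
        windows.append(
            [sum(sample[ch] ** 2 for sample in chunk) / step for ch in range(N_CHANNELS)]
        )
    return windows


# Usage: 120 ms of a constant 2.0 signal on every channel gives two 50-ms windows.
fake = [[2.0] * N_CHANNELS for _ in range(120)]
power = window_power(fake)
print(len(power), power[0][0])  # 2 4.0
```

Each window collapses 50 samples into one number per channel, so the output keeps the array’s spatial layout while stepping through time in tens of milliseconds.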

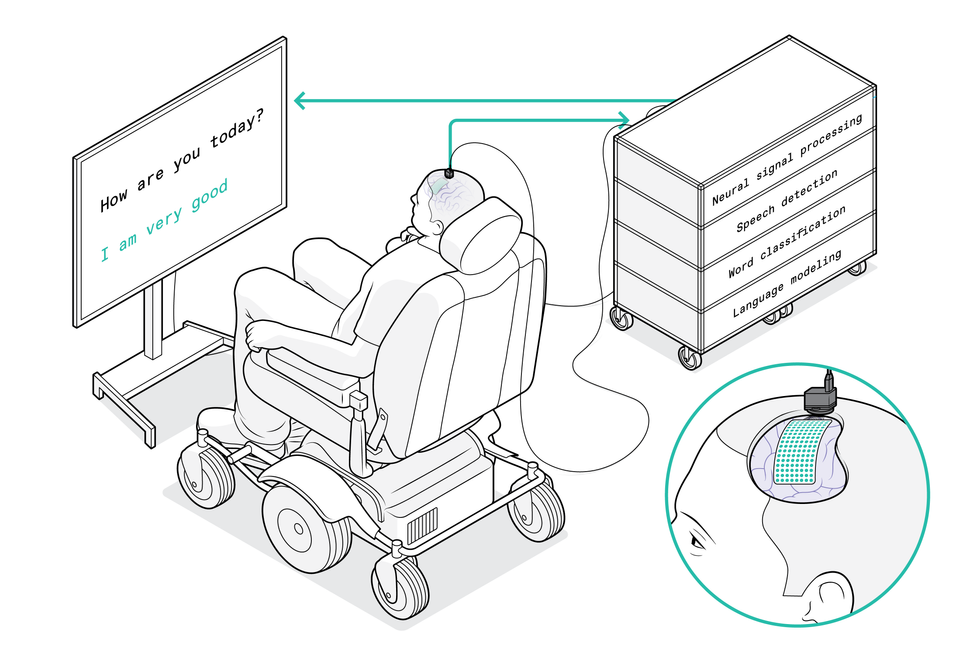

The system starts with a flexible electrode array that is draped over the patient’s brain to pick up signals from the motor cortex. The array specifically captures movement commands intended for the patient’s vocal tract. A port affixed to the skull guides the wires that go to the computer system, which decodes the brain signals and translates them into the words that the patient wants to say. His answers then appear on the display screen. Chris Philpot

We used these systems to match neural patterns to movements of the vocal tract. At first we had a lot of questions about the neural code. One possibility was that neural activity encoded directions for particular muscles, and the brain essentially turned these muscles on and off as if pressing keys on a keyboard. Another idea was that the code determined the velocity of the muscle contractions. Yet another was that neural activity corresponded with coordinated patterns of muscle contractions used to produce a certain sound. (For example, to make the “aaah” sound, both the tongue and the jaw need to drop.) What we discovered was that there is a map of representations that controls different parts of the vocal tract, and that the different brain areas combine in a coordinated manner to give rise to fluent speech.

The role of AI in today’s neurotech

Our work depends on the advances in artificial intelligence over the past decade. We can feed the data we collected about both neural activity and the kinematics of speech into a neural network, then let the machine-learning algorithm find patterns in the associations between the two data sets. It was possible to make connections between neural activity and produced speech, and to use this model to generate computer-synthesized speech or text. But this technique couldn’t train an algorithm for paralyzed people because we’d lack half of the data: We’d have the neural patterns, but nothing about the corresponding muscle movements.

The smarter way to use machine learning, we realized, was to break the problem into two steps. First, the decoder translates signals from the brain into intended movements of muscles in the vocal tract; then it translates those intended movements into synthesized speech or text.

We call this a biomimetic approach because it copies biology: in the human body, neural activity is directly responsible for the vocal tract’s movements and only indirectly responsible for the sounds produced. A big advantage of this approach comes in training the decoder for the second step, translating muscle movements into sounds. Because the relationships between vocal tract movements and sound are fairly universal, we were able to train that part of the decoder on large data sets derived from people who weren’t paralyzed.
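The two-step structure can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the neural-network decoder described in this article: stage 1 is a fixed, hypothetical linear map `W` from three neural features to two articulator values, and stage 2 is a nearest-centroid classifier. What it shows is that stage 2 is trained only on (movement, sound) pairs, with no neural data, which is why it can learn from speakers who are not paralyzed. How stage 1 is trained for a paralyzed user is not covered here.

```python
from math import dist
from statistics import fmean

# Stage 1 (hypothetical weights): each row maps three neural features to one
# articulator value. A real decoder would learn this mapping.
W = [
    [0.8, 0.1, 0.0],  # "jaw opening"
    [0.0, 0.2, 0.9],  # "tongue height"
]


def neural_to_kinematics(features):
    """Stage 1: neural features -> intended vocal-tract movements."""
    return [sum(w * f for w, f in zip(row, features)) for row in W]


def train_kinematics_to_sound(examples):
    """Stage 2 training: mean movement vector for each sound label.

    `examples` is a list of (kinematics, label) pairs. No neural data is
    needed, so these pairs can come from speakers who are not paralyzed.
    """
    by_label = {}
    for kinematics, label in examples:
        by_label.setdefault(label, []).append(kinematics)
    return {
        label: [fmean(values) for values in zip(*vectors)]
        for label, vectors in by_label.items()
    }


def kinematics_to_sound(kinematics, centroids):
    """Stage 2: choose the sound whose mean movement is closest."""
    return min(centroids, key=lambda label: dist(kinematics, centroids[label]))


def decode(features, centroids):
    """Full biomimetic chain: brain signals -> movements -> sound."""
    return kinematics_to_sound(neural_to_kinematics(features), centroids)


# Stage 2 trained on movement data alone (values are made up).
able_bodied = [
    ([0.9, 0.1], "aa"), ([1.0, 0.2], "aa"),  # jaw open, tongue low
    ([0.1, 0.9], "ee"), ([0.2, 1.0], "ee"),  # jaw nearly closed, tongue raised
]
centroids = train_kinematics_to_sound(able_bodied)
print(decode([1.0, 0.0, 0.1], centroids))  # aa
```

Because the two stages meet only at the movement vector, either one could be replaced by a stronger model (for example, a neural network) without changing `decode`, which is the practical payoff of splitting the problem.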

A clinical trial to test our speech neuroprosthetic

The next big challenge was to bring the technology to the people who could really benefit from it.

The National Institutes of Health (NIH) is funding our pilot trial, which began in 2021. We now have two paralyzed volunteers with implanted ECoG arrays, and we hope to enroll more in the coming years. The primary goal is to improve their communication, and we’re measuring performance in terms of words per minute. An average adult typing on a full keyboard can type 40 words per minute, with the fastest typists reaching speeds of more than 80 words per minute.

Edward Chang was inspired to develop a brain-to-speech system by the patients he encountered in his neurosurgery practice. Barbara Ries

We think that tapping into the speech system can provide even better results. Human speech is much faster than typing: An English speaker can easily say 150 words in a minute. We’d like to enable paralyzed people to communicate at a rate of 100 words per minute. We have a lot of work to do to reach that goal, but we think our approach makes it a feasible target.
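The rates quoted in this section can be compared with simple arithmetic. The helper names `words_per_minute` and `seconds_for` are hypothetical; the figures are the ones stated in the text (18, 40, 80, 100, and 150 words per minute).

```python
# Rates quoted in the article, in words per minute.
RATES_WPM = {
    "handwriting BCI (2021 record)": 18,
    "average typist": 40,
    "fast typist": 80,
    "speech neuroprosthesis goal": 100,
    "natural English speech": 150,
}


def words_per_minute(word_count, seconds):
    """Communication rate from a word count and elapsed time in seconds."""
    return word_count * 60 / seconds


def seconds_for(word_count, wpm):
    """Time in seconds needed to produce `word_count` words at `wpm`."""
    return word_count * 60 / wpm


for name, wpm in RATES_WPM.items():
    print(f"{name}: {seconds_for(10, wpm):.1f} s for a 10-word sentence")
```

At 18 words per minute a 10-word sentence takes about 33 seconds; at the 100-word-per-minute goal it would take 6 seconds.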

The implant procedure is routine. First the surgeon removes a small portion of the skull; next, the flexible ECoG array is gently placed across the surface of the cortex. Then a small port is fixed to the skull bone and exits through a separate opening in the scalp. We currently need that port, which attaches to external wires to transmit data from the electrodes, but we hope to make the system wireless in the future.

We’ve considered using penetrating microelectrodes, because they can record from smaller neural populations and may therefore provide more detail about neural activity. But the current hardware isn’t as robust and safe as ECoG for clinical applications, especially over many years.

Another consideration is that penetrating electrodes typically require daily recalibration to turn the neural signals into clear commands, and research on neural devices has shown that speed of setup and reliability of performance are key to getting people to use the technology. That’s why we have prioritized stability in creating a “plug and play” system for long-term use. We conducted a study looking at the variability of a volunteer’s neural signals over time and found that the decoder performed better if it used data patterns across multiple sessions and multiple days. In machine-learning terms, we say that the decoder’s “weights” carried over, creating consolidated neural signals.
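One way to picture weights that “carry over” is a decoder whose parameters accumulate across sessions instead of being reset at each recalibration. The class that follows is a hypothetical nearest-centroid decoder, an assumption made for illustration and not the algorithm used in the study: each session’s labeled examples update running per-word sums, so the centroids reflect data from every session and day seen so far.

```python
from math import dist


class PooledCentroidDecoder:
    """Hypothetical decoder whose parameters carry over between sessions.

    Each word label keeps a running sum of feature vectors and a count, so
    adding a new session refines the existing centroids instead of
    replacing them.
    """

    def __init__(self):
        self._sums = {}    # label -> per-feature running sum
        self._counts = {}  # label -> number of examples seen so far

    def add_session(self, examples):
        """Fold one session's (features, label) pairs into the model."""
        for features, label in examples:
            if label not in self._sums:
                self._sums[label] = [0.0] * len(features)
                self._counts[label] = 0
            self._sums[label] = [s + f for s, f in zip(self._sums[label], features)]
            self._counts[label] += 1

    def centroid(self, label):
        """Mean feature vector for a label across all sessions so far."""
        n = self._counts[label]
        return [s / n for s in self._sums[label]]

    def predict(self, features):
        """Return the label whose pooled centroid is nearest."""
        return min(self._sums, key=lambda label: dist(features, self.centroid(label)))


decoder = PooledCentroidDecoder()
# Day 1 and day 2 (made-up numbers): the signal patterns drift between days.
decoder.add_session([([1.0, 0.0], "help"), ([0.0, 1.0], "thirsty")])
decoder.add_session([([1.2, 0.2], "help"), ([0.2, 1.2], "thirsty")])
print(decoder.centroid("help"))     # mean of both days, about [1.1, 0.1]
print(decoder.predict([1.1, 0.1]))  # help
```

A decoder recalibrated from scratch each day would keep only the latest session’s centroids; pooling keeps every day’s evidence in the same parameters.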

College of California, San Francisco

Because our paralyzed volunteers can’t speak while we watch their brain patterns, we asked our first volunteer to try two different approaches. He started with a list of 50 words that are handy for daily life, such as “hungry,” “thirsty,” “please,” “help,” and “computer.” During 48 sessions over several months, we sometimes asked him to just imagine saying each of the words on the list, and sometimes asked him to overtly attempt to say them. We found that attempts to speak generated clearer brain signals and were sufficient to train the decoding algorithm. The volunteer could then use the words on the list to generate sentences of his own choosing, such as “No I am not thirsty.”
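Restricting output to a fixed word list means each attempted word can be treated as a choice among the list’s entries. The following sketch assumes a hypothetical classifier that emits one score per vocabulary word for each attempt; a softmax turns the scores into probabilities, and the most probable word is kept. Only part of the 50-word list is named in the text, so `VOCABULARY` here is an assumed subset containing the five listed words plus the words of “No I am not thirsty.”

```python
import math

# Assumed subset of the 50-word list: the five words named in the text plus
# the words of the example sentence "No I am not thirsty."
VOCABULARY = ["hungry", "thirsty", "please", "help", "computer", "no", "i", "am", "not"]


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decode_attempt(scores):
    """Return (word, probability) for one attempted word.

    `scores` holds one hypothetical classifier score per VOCABULARY entry,
    so the decoded word is always on the list.
    """
    probs = softmax(scores)
    best = max(range(len(VOCABULARY)), key=probs.__getitem__)
    return VOCABULARY[best], probs[best]


def decode_sentence(attempts):
    """Decode a sequence of attempts into a space-separated sentence."""
    return " ".join(decode_attempt(scores)[0] for scores in attempts)


def fake_scores(word, strength=5.0):
    """Made-up scores that favor `word`; stands in for real neural output."""
    return [strength if v == word else 0.0 for v in VOCABULARY]


attempts = [fake_scores(w) for w in ["no", "i", "am", "not", "thirsty"]]
print(decode_sentence(attempts))  # no i am not thirsty
```

Keeping the per-word probability alongside the chosen word leaves room to reject low-confidence attempts rather than always emitting a word.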

We’re now pushing to expand to a broader vocabulary. To make that work, we need to continue to improve the current algorithms and interfaces, but I am confident those improvements will happen in the coming months and years. Now that the proof of principle has been established, the goal is optimization. We can focus on making our system faster, more accurate, and, most important, safer and more reliable. Things should move quickly now.

Probably the biggest breakthroughs will come if we can get a better understanding of the brain systems we’re trying to decode, and of how paralysis alters their activity. We’ve come to realize that the neural patterns of a paralyzed person who can’t send commands to the muscles of the vocal tract are very different from those of an epilepsy patient who can. We’re attempting an ambitious feat of BMI engineering while there is still a lot to learn about the underlying neuroscience. We believe it will all come together to give our patients their voices back.
